A short promotional video of the Stan’s Cafe performance installation, Of All The People In All The World, in which rice is used to represent human statistics.

[via infosthetics]

urging governments to make data about canada and canadians free and accessible to citizens

You are currently browsing the monthly archive for January 2008.

A short promotional video of the Stan’s Cafe performance installation, Of All The People In All The World, in which rice is used to represent human statistics.

[via infosthetics]

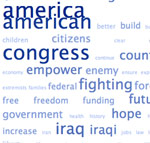

Civic data comes in many guises, and this is a neat way to analyze a politician’s speeches:

Last year’s 2007 State of the Union Tag Cloud was such a hit, I decided to follow up again this year…

[from Boing Boing]

Would be nice to do this systematically for every politician, maybe just based on their web pages? Or published documents, anyway.

Well, why not? Why not, indeed? Here is:

Stephen Harper:

Stephane Dion:

Jack Layton:

Gilles Duceppe:

MySociety has released some very useful and sexy interactive travel-time maps for the UK using public data.

This is a most interesting use of political geodemographics – The Copyright MPs.

Ted at GANIS blog introduces this very interesting data visualization initiative – The British Columbia Atlas of Wellness.

Environment XML is now live http://www.haque.co.uk/environmentxml/live/ enabling people to tag and share remote realtime environmental data; if you are using Flash, Processing, Arduino, Director or any other application that parses XML then you can both respond to and contribute to environments and devices around the world.

EnvironmentXML proposes a kind of “RSS feed” for tagged environmental data, enabling anyone to release realtime environmental data from a physical object or space in XML format via the internet in such a way that this content becomes part of the input data to spaces/interfaces/objects designed by other people.

[more…]

From Wired:

Sources at Google have disclosed that the humble domain, http://research.google.com, will soon provide a home for terabytes of open-source scientific datasets. The storage will be free to scientists and access to the data will be free for all. The project, known as Palimpsest and first previewed to the scientific community at the Science Foo camp at the Googleplex last August, missed its original launch date this week, but will debut soon.

Building on the company’s acquisition of the data visualization technology, Trendalyzer, from the oft-lauded, TED presenting Gapminder team, Google will also be offering algorithms for the examination and probing of the information. The new site will have YouTube-style annotating and commenting features.

[Via Open Access News]

The Federation of Canadian Municipalities has just released its Quality of Life Reporting System (Press Release) – Trends & Issues in Affordable Housing and Homelessness (Report in pdf). If you go to the end of the report & this post you will find the data sources required to write this important report on the situation of housing & homelessness in Canadian Cities.

NOTE – these public datasets were purchased to do this analysis. It costs many many thousands of dollars to acquire these public data. Public data used to inform citizens on a most fundamental issue – shelter for Canadians. Statistics Canada does not generally do city scale analysis as it is a Federal agency and the Provinces will generally not do comparative analysis beyond cities in their respective provinces. This type of cross country and cross city analysis requires a not-for-profit organization or the private sector to do the work. We are very fortunate that the FCM has the where-with-all to prepare these reports on an ongoing basis. This is an expensive proposition, not only because subject matter specialists are required, much city consultation is necessary for contextual and validation reasons but also because the datasets themselves are extremely costly. There is no real reason to justify this beyond cost recovery policies. Statistics Canada and CMHC are the worst in this regard. The other datasets used are not readily accessible to most. While the contextual data requires specially designed surveys.

The documents referred to in this report were however freely available but not readily findable/discoverable as there is no central repository or portal where authors can register/catalogue their reports. This is unfortunate as it takes a substantial amount of effort to dig up specialized material from each city, province or federal departments and NGOs.

Public (but not free) Datasets Used in the report:

Report and Press Release Via Ted on the Social Planning Network of Ontario Mailing List.

Peter Suber reports that:

The Scientific Council of the European Research Council has released its Guidelines for Open Access [pdf]…

Here is the text:

- Scientific research is generating vast, ever increasing quantities of information, including primary data, data structured and integrated into databases, and scientific publications. In the age of the Internet, free and efficient access to information, including scientific publications and original data, will be the key for sustained progress.

- Peer-review is of fundamental importance in ensuring the certification and dissemination of high-quality scientific research. Policies towards access to peer-reviewed scientific publications must guarantee the ability of the system to continue to deliver high-quality certification services based on scientific integrity.

- Access to unprocessed data is needed not only for independent verification of results but, more importantly, for secure preservation and fresh analysis and utilisation of the data.

- A number of freely accessible repositories and curated databases for publications and data already exist serving researchers in the EU. Over 400 research repositories are run by European research institutions and several fields of scientific research have their own international discipline-specific repositories. These include for example PubMed Central for peer-reviewed publications in the life sciences and medicine, the arXiv Internet preprint archive for physics and mathematics, the DDBJ/EMBL/GenBank nucleotide sequence database and the RSCB-PDB/MSD-EBI/PDBj protein structure database.

- With few exceptions, the social sciences & humanities (SSH) do not yet have the benefit of public central repositories for their recent journal publications. The importance of open access to primary data, old manuscripts, collections and archives is even more acute for SSH. In the social sciences many primary or secondary data, such as social survey data and statistical data, exist in the public domain, but usually at national level. In the case of the humanities, open access to primary sources (such as archives, manuscripts and collections) is often hindered by private (or even public or nation-state) ownership which permits access either on a highly selective basis or not at all.

Based on these considerations, and following up on its earlier Statement on Open Access (Appendix 1) the ERC Scientific Council has established the following interim position on open access:

- The ERC requires that all peer-reviewed publications from ERC-funded research projects be deposited on publication into an appropriate research repository where available, such as PubMed Central, ArXiv or an institutional repository, and subsequently made Open Access within 6 months of publication.

- The ERC considers essential that primary data – which in the life sciences for example could comprise data such as nucleotide/protein sequences, macromolecular atomic coordinates and anonymized epidemiological data – are deposited to the relevant databases as soon as possible, preferably immediately after publication and in any case not later than 6 months after the date of publication.

The ERC is keenly aware of the desirability to shorten the period between publication and open access beyond the currently accepted standard of 6 months.

Peter has some good analysis.

What is the NRC’s policy on Open Access?

Comments on Posts